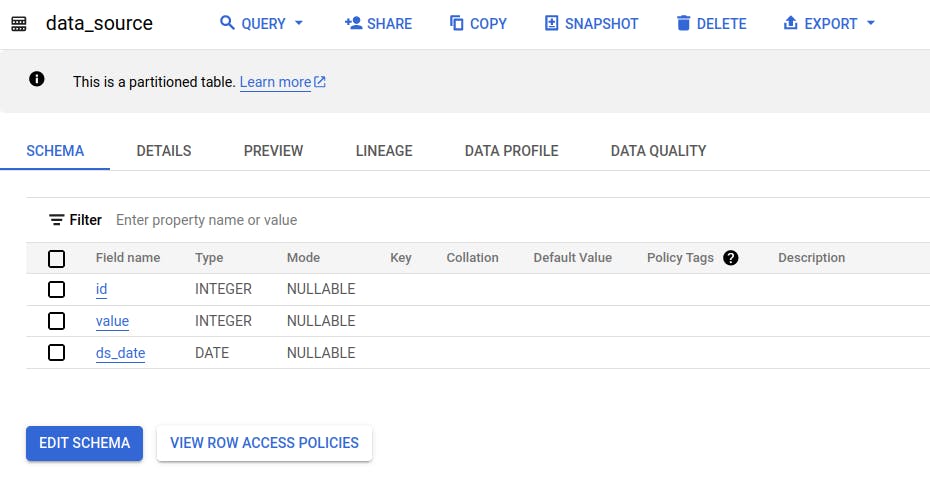

It should be no surprise that understanding your data is very important when working with it. The initial step in my approach to a new dataset always involves examining the data closely. Although the Schema Tab reveals the data schema, to grasp the contents, identify missing data, spot potential issues, and review the cardinality of values, taking a look at the data is unavoidable.

Then comes the issue of cost.

In BigQuery, when working with big tables, you should know that a LIMIT clause does not reduce the amount of data processed (and thus the cost), but merely truncates the output.

There are a couple of ways around that.

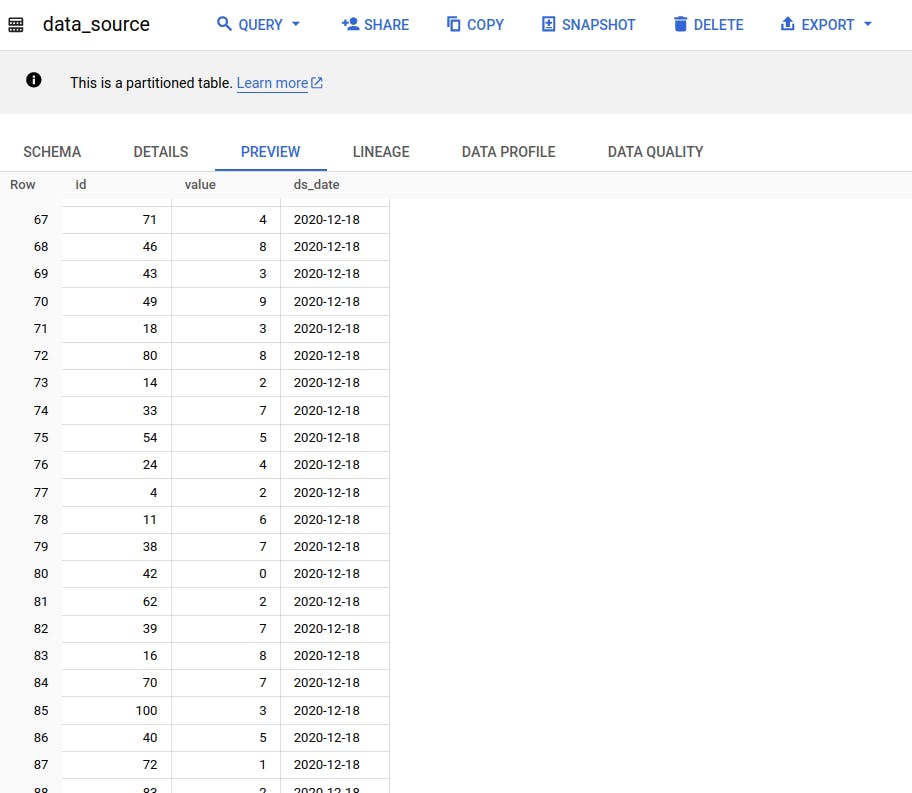

Preview

The simplest is the PREVIEW button, which allows us to see a subset of rows from this particular table. This does not incur any charges.

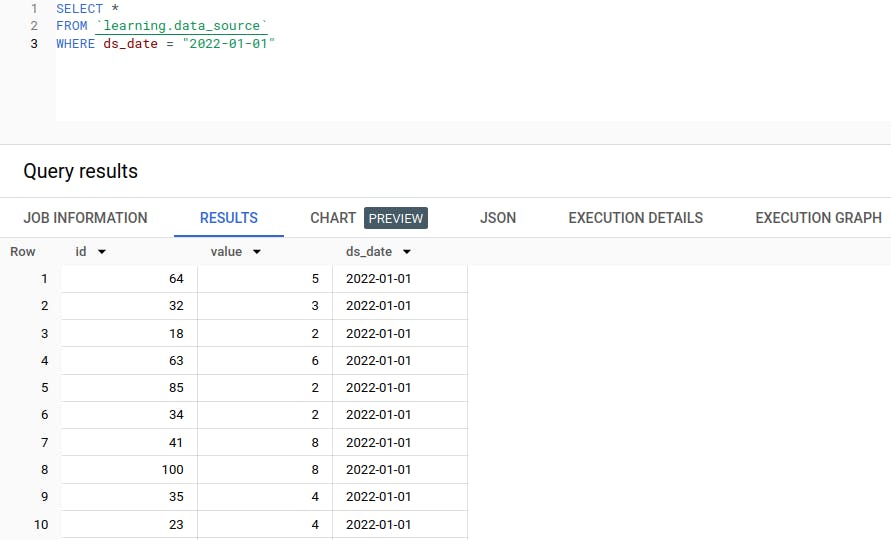

Partitioning

Another way would be leveraging partitions in a partitioned table. By selecting one particular partition in such a table we will achieve partition elimination - BigQuery will ignore all other partitions (dates) and process only the one we are providing, achieving a cost saving for us.

Sampling

A relatively new way would be using Table Sampling. While this is still in Pre-GA (so not fit for production yet), it is still handy when doing exploratory work with data.

How does it work? Using the TABLESAMPLE SYSTEM command, you provide a percentage of rows that you'd like sampled and returned to you.

The SQL command would look like as follows:

SELECT *

FROM `learning.data_source`

TABLESAMPLE SYSTEM (1 PERCENT)

Using this, from my table of ~400k rows the query returned 4k rows.

To summarize, employing these three strategies — Preview, Partition Filtering, and Sampling — together, significantly saves time, computational resources, and consequently, money during the exploratory stage, especially when handling big tables.

Found it useful? Subscribe to my Analytics newsletter at notjustsql.com.